The UK Age Appropriate Design Code: Childproofing the Digital World

“A generation from now, I believe we will look back and find it peculiar that online services weren’t always designed with children in mind. When my grandchildren are grown and have children of their own, the need to keep children safer online will be as second nature as the need to ensure they eat healthy, get a good education or buckle up in the back of a car.”

– Information Commissioner Elizabeth Denham

In May 2018, the European Union’s General Data Protection Regulation (GDPR) went into effect, recognizing for the first time within the European Union (EU) that children’s personal data warrants special protection. The United Kingdom’s Data Protection Act 2018 adopted GDPR within the United Kingdom and, among other things, charged the Information Commissioner’s Office (ICO) with developing a code of practice to protect children’s personal data online. The result is the Age Appropriate Design Code (also referred to as the Children’s Code), an ambitious attempt to childproof the digital world.

The Internet was not built with children in mind, yet children are prolific users of it. The Children’s Code, which comprises fifteen “Standards,” seeks to correct that incongruity by requiring online services that children are likely to use to be designed with their best interests in mind.

For more than twenty years, the U.S. Children’s Online Privacy Protection Act (COPPA) has been the primary source of protection for children’s privacy online. COPPA protects the privacy of Internet users under 13 years old, primarily by requiring informed, verifiable consent from a parent or guardian. The Children’s Code, however, has much grander aspirations. It protects all children under 18 years old and asks companies to reimagine their online services from the ground up.

The foundational principle of the Children’s Code calls for online services likely to be accessed by children under 18 years old to be designed and developed with the best interests of the child as a primary consideration. The Children’s Code is grounded in the United Nations Convention on the Rights of the Child (UNCRC), which recognizes that children have several rights, including the rights to privacy and to be free from economic exploitation; to access information; to associate with others and play; and to have a voice in matters that affect them.

To meet the best interests of the child, online services must comply with each of the applicable fifteen Standards. Those Standards are distilled below.

1. Assessing and Mitigating Risks

Compliance with the Children’s Code begins with a Data Protection Impact Assessment (DPIA), a roadmap to compliance and a requirement for all online services that are likely to be accessed by children under 18 years old. The DPIA must identify the risks the online service poses to children, the ways in which the online service mitigates those risks, and how it balances the varying and sometimes competing rights and interests of children of different age groups. If the ICO conducts an audit of an online service or investigates a consumer complaint, the DPIA will be among the first documents requested.

The ICO suggests involving experts and consulting research to help with this process. This might not be feasible for all companies. At a minimum, however, small- and medium-sized companies with online services that create risks to children will be expected to keep up to date with resources that are publicly available. More will be expected of larger companies.

While the Internet cannot exist without commercial interests, the primary consideration must be the best interests of the child. If there is a conflict between the commercial interests of an online service and the child’s interests, the child’s interests must prevail.

2. Achieving Risk-Proportionate Age Assurance

To adequately assess and mitigate risk, an online service must have a level of confidence in the age range(s) of its users that is proportionate to the risks posed by the online service. The greater the risk, the more confidence the online service must have.

The ICO identifies several options to obtain what it calls “age assurance,” which can be used alone or in combination depending on the circumstances. Age assurance options include self-declaration by users (a/k/a age gates), artificial intelligence (AI), third-party verification services, and hard identifiers (e.g., government IDs). Less reliable options, like age gates, are only permitted in low-risk situations or when combined with other age assurance mechanisms.

Achieving an adequate level of confidence will be challenging. The Verification of Children Online (VoCO), a multi-stakeholder child online safety research project led by the U.K.’s Department for Digital, Culture, Media & Sport (DCMS), is attempting to address that challenge. The VoCO Phase 2 Report provided the following potential flow as an example:

[F]or a platform that needs a medium level of confidence, a user could initially declare their age as part of the onboarding process, and alongside this an automated age assurance method (such as using AI analysis) could be used to confirm the declared age. If this measure suggests a different age band than that stated, which reduces confidence in the initial assessment, a request could be made to validate the user’s age through a verified digital parent.

Ultimately, if an online service is unable to reach an appropriate level of confidence, it has two options: 1) take steps to adequately reduce the level of risk; or 2) apply the Children’s Code to all users, even adults.
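For engineering teams, the escalation logic described above might be sketched roughly as follows. This is only an illustration under stated assumptions: the `Confidence` levels, the age band strings, the action names, and the rule that a matching automated estimate yields "medium" confidence while parent verification yields "high" are hypothetical choices made for this sketch. They are not definitions taken from the Children’s Code or the VoCO report.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional, Tuple


class Confidence(IntEnum):
    # Hypothetical three-level scale; the Code does not define numeric levels.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AgeSignals:
    declared_band: str                          # self-declared at onboarding
    estimated_band: Optional[str] = None        # automated estimate, if one was run
    parent_verified_band: Optional[str] = None  # confirmed by a verified parent, if any


def assess(signals: AgeSignals) -> Tuple[str, Confidence]:
    """Return the age band to rely on and the (assumed) confidence in it."""
    if signals.parent_verified_band is not None:
        return signals.parent_verified_band, Confidence.HIGH
    if signals.estimated_band is None:
        # Self-declaration alone is treated as the least reliable signal.
        return signals.declared_band, Confidence.LOW
    if signals.estimated_band == signals.declared_band:
        # The automated method confirms the declared age.
        return signals.declared_band, Confidence.MEDIUM
    # A conflicting estimate reduces confidence in the declaration.
    return signals.declared_band, Confidence.LOW


def next_step(signals: AgeSignals, required: Confidence,
              parent_verification_available: bool = True) -> str:
    """Pick the next action given the confidence the service's risk level requires."""
    band, confidence = assess(signals)
    if confidence >= required:
        return f"proceed:{band}"
    if signals.estimated_band is None:
        return "run_automated_estimate"
    if signals.parent_verified_band is None and parent_verification_available:
        return "request_parent_verification"
    # Neither option worked: fall back to the two choices the Code leaves open.
    return "reduce_risk_or_apply_code_to_all_users"


if __name__ == "__main__":
    # Declared age contradicted by the automated estimate, medium confidence needed.
    print(next_step(AgeSignals("16-17", estimated_band="10-12"), Confidence.MEDIUM))
```

The point of the sketch is the shape of the decision rather than the specific thresholds: the required confidence is driven by the risk identified in the DPIA, and the final fallback mirrors the two options noted above.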

3. Setting High Privacy by Default

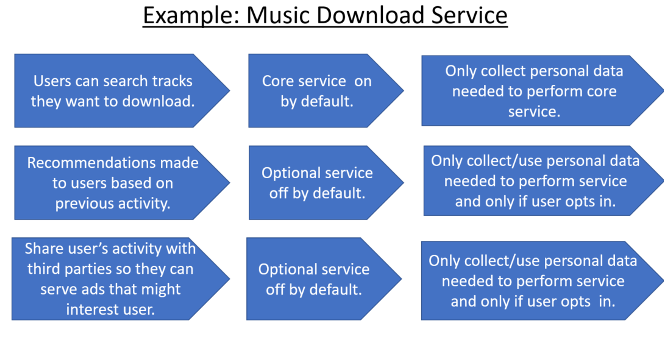

For all children, high privacy must be the default setting. This means an online service may only collect the minimum amount of personal data needed to provide the core or most basic service. Additional, optional elements of the online service, for example to personalize offerings, would have to be individually selected and activated by the child. To illustrate this point, the ICO uses the example of a music download service.

High privacy by default also means that children’s personal information cannot be used in ways that have been shown to be detrimental. Based on specific Standards within the Children’s Code, this means the following must be turned off by default:

- Profiling (for example, behavioral advertising);

- Geolocation tracking;

- Marketing and advertising that does not comply with The Committee of Advertising Practice (CAP) Code in the United Kingdom;

- Sharing children’s personal data; and

- Utilizing nudge techniques that lead children to make poor choices.

To turn these on, the online service must be able to demonstrate a compelling reason and adequate safeguards.
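One way a development team might encode this default-off posture is sketched below. The feature names, the `PrivacySettings` class, and the requirement to record a reason and safeguards as free text are all hypothetical conventions for illustration. The Children’s Code does not prescribe any particular data structure, and a recorded justification is not the same thing as a legally sufficient one.

```python
from dataclasses import dataclass, field
from typing import Dict

# Features the article lists as off by default (names are illustrative).
DEFAULT_OFF = (
    "profiling",
    "geolocation",
    "non_cap_compliant_marketing",
    "data_sharing",
    "nudge_techniques",
)


@dataclass
class PrivacySettings:
    # Every listed feature starts disabled for a child user.
    enabled: Dict[str, bool] = field(
        default_factory=lambda: {name: False for name in DEFAULT_OFF}
    )
    # Documented reason and safeguards for each feature that was switched on.
    justifications: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def enable(self, feature: str, compelling_reason: str, safeguards: str) -> None:
        """Switch a feature on only when a reason and safeguards are documented."""
        if feature not in self.enabled:
            raise KeyError(f"unknown feature: {feature}")
        if not compelling_reason.strip() or not safeguards.strip():
            raise ValueError("a compelling reason and safeguards must be documented")
        self.enabled[feature] = True
        self.justifications[feature] = {
            "compelling_reason": compelling_reason,
            "safeguards": safeguards,
        }


if __name__ == "__main__":
    settings = PrivacySettings()
    print(settings.enabled)  # every feature is False at this point
```

Keeping the justification next to the setting also gives the service a record it can point to in its DPIA if a default is ever questioned.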

4. Making Online Tools Available

Children must be given the tools to exercise their privacy rights, whether it be opting into optional parts of a service or asking to delete or get access to their personal information. The tools should be highlighted during the start-up process and must be prominently placed on the user’s screen. They must also be tailored to the age ranges of the users that access the online service. The ICO encourages using easily identifiable icons and other age-appropriate mechanisms.

5. Communicating Age-Appropriate Privacy Information

The Children’s Code requires all privacy-related information to be communicated to children in a way they can understand. This includes traditional privacy policies, as well as bite-sized, just-in-time notices. To help achieve this daunting task, the ICO provides age-based guidance. For example, for children 6 to 9 years old, the ICO recommends providing complete privacy disclosures for parents, while explaining the basic concepts to the children. If a child in this age range attempts to change a default setting, the ICO recommends using a prompt to get the child’s attention, explaining what will happen and instructing the child to get a trusted adult. The ICO also encourages the use of cartoons, videos and audio materials to help make the information understandable to children in different age groups and at different stages of development.

For connected toys and devices, the Children’s Code requires notice to be provided at the point of purchase, for example, a disclosure or easily identifiable icon on the packaging of the physical product. Disclosures about the collection and use of personal data should also be provided prior to setup (e.g., in the instructions or a special insert). Any time a connected device is collecting information, it should be obvious to the user (e.g., a light goes on), and collection should always be avoided while the device is in standby mode.

6. Being Fair

The Children’s Code expects online services to act fairly when processing children’s personal data. In essence, this means online services must say what they do, and do what they say. This edict applies not just to privacy disclosures, but to all published terms, policies and community standards. If, for example, an online service’s community standards prohibit bullying, the failure to enforce that standard could result in a finding that the online service unfairly collected a child’s personal data.

Initial implementation of the Children’s Code will be a challenge. User disruption is inevitable, as are increased compliance and engineering costs. The return on that initial investment, however, will hopefully make it all worthwhile. If Commissioner Denham’s vision is realized, the digital world will become a safe place for children to socialize, create, play, and learn.

• • •

If you have more questions about the Age Appropriate Design Code or want to learn more about our program, please reach out to us through our Contact page. Be sure to follow us on Twitter and LinkedIn for more privacy-related updates.